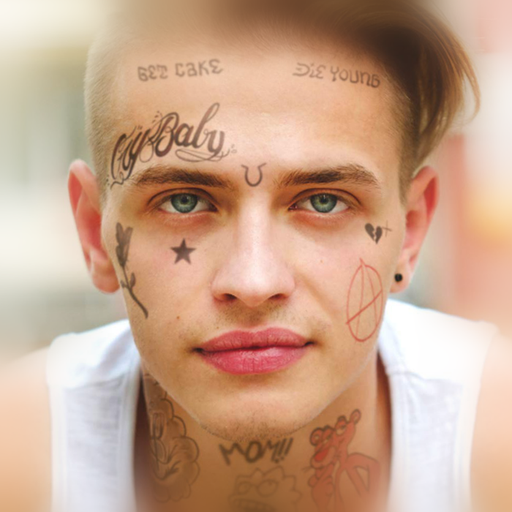

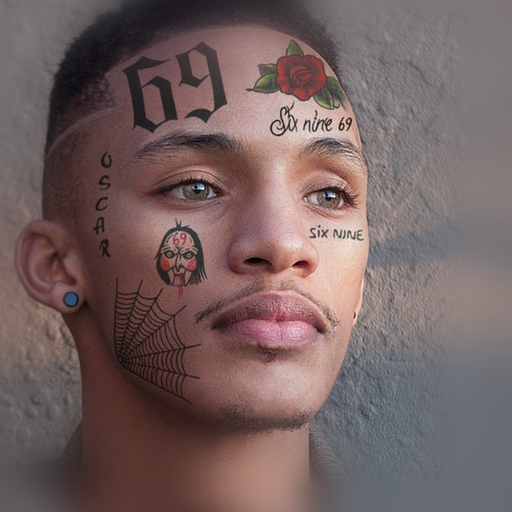

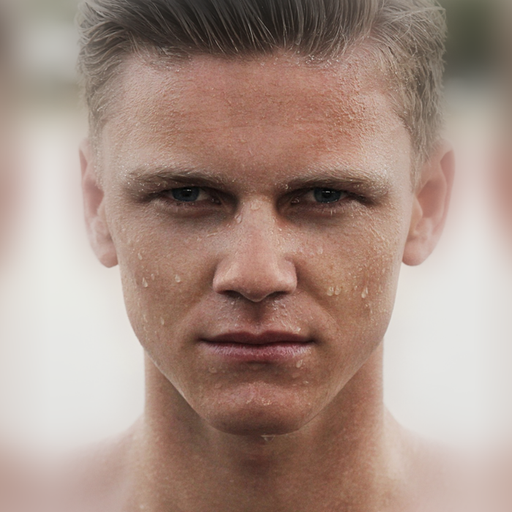

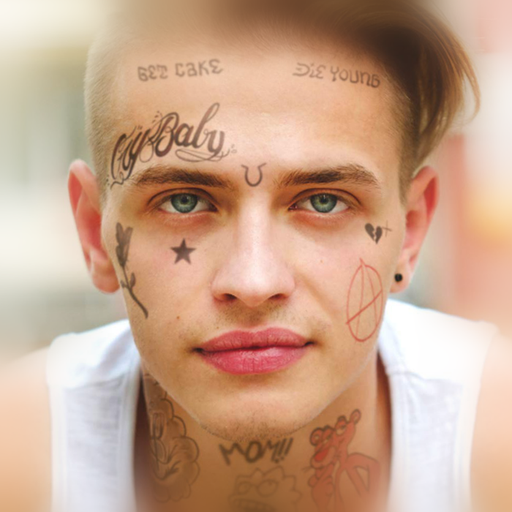

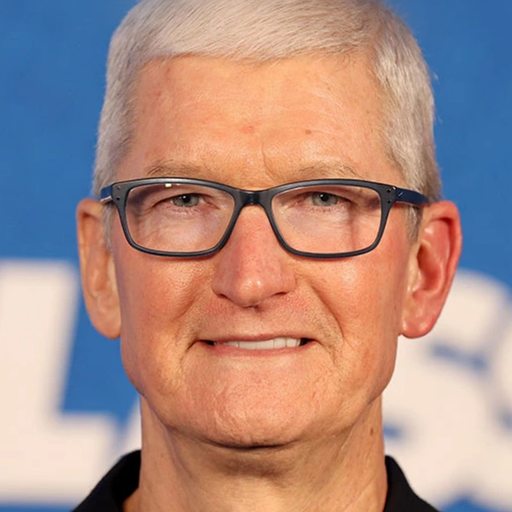

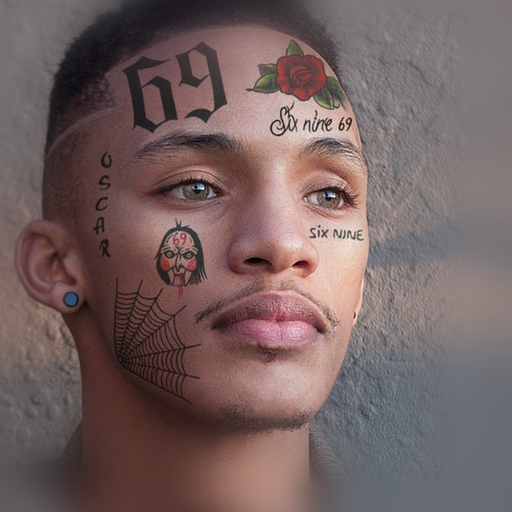

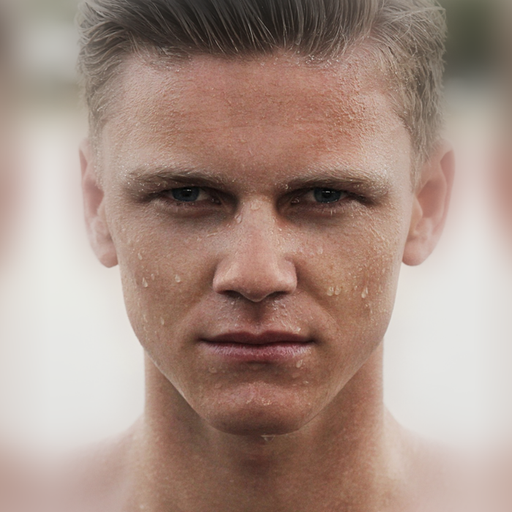

We present a high-fidelity 3D generative adversarial network (GAN) inversion framework that synthesizes photo-realistic novel views while preserving specific details of the input image. High-fidelity 3D GAN inversion is inherently challenging because of the geometry-texture trade-off: overfitting to a single-view input image often damages the estimated geometry during latent optimization. To address this challenge, we propose a novel pipeline built on pseudo-multi-view estimation with visibility analysis. We keep the original textures for the visible parts and use generative priors for the occluded parts. Extensive experiments show that our approach achieves superior reconstruction and novel view synthesis quality compared with state-of-the-art methods, even for images with out-of-distribution textures. The proposed pipeline also enables image attribute editing with the inverted latent code, as well as 3D-aware texture modification. Our approach enables high-fidelity 3D rendering from a single image, which is promising for various applications of AI-generated 3D content.
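The visibility analysis is not specified in detail at this point. One common way to implement such a test, sketched here as an assumption rather than the authors' code, is a depth-reprojection check. Each pixel of the novel view is back-projected using a depth map (for example, one rendered from the 3D GAN's estimated geometry), and the resulting 3D point is projected into the input camera. The pixel counts as visible if the point lands inside the input image and its depth agrees with the input-view depth there. The pinhole model with shared intrinsics `K`, 4x4 camera-to-world matrices, z-depth maps of equal resolution for both views, the relative tolerance `tol`, and all function names are hypothetical.

```python
import numpy as np


def backproject(depth, K, cam_to_world):
    """Lift every pixel of a z-depth map (H, W) to world-space 3D points (H*W, 3)."""
    H, W = depth.shape
    v, u = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3).astype(np.float64)
    rays = pix @ np.linalg.inv(K).T               # camera-space rays with z = 1
    pts_cam = rays * depth.reshape(-1, 1)         # scale each ray by its z-depth
    pts_h = np.hstack([pts_cam, np.ones((H * W, 1))])
    return (pts_h @ cam_to_world.T)[:, :3]


def project(points, K, world_to_cam):
    """Project world points (N, 3) to pixel coords (N, 2), camera z-depths (N,), in-front flags."""
    pts_h = np.hstack([points, np.ones((len(points), 1))])
    pts_cam = (pts_h @ world_to_cam.T)[:, :3]
    z = pts_cam[:, 2]
    in_front = z > 1e-8
    safe_z = np.where(in_front, z, 1.0)           # avoid dividing by zero or negative depth
    uv = (pts_cam @ K.T)[:, :2] / safe_z[:, None]
    return uv, z, in_front


def visibility_mask(depth_novel, depth_input, K, novel_to_world, input_to_world, tol=1e-2):
    """Mark novel-view pixels whose surface point is also seen by the input camera.

    Returns a boolean mask (H, W) and, for each novel-view pixel, its (u, v)
    location in the input image (H, W, 2).
    """
    H, W = depth_novel.shape
    pts = backproject(depth_novel, K, novel_to_world)
    uv, z, in_front = project(pts, K, np.linalg.inv(input_to_world))
    u = np.round(uv[:, 0]).astype(int)
    v = np.round(uv[:, 1]).astype(int)
    inside = in_front & (u >= 0) & (u < W) & (v >= 0) & (v < H)
    visible = np.zeros(H * W, dtype=bool)
    idx = np.nonzero(inside)[0]
    d_in = depth_input[v[idx], u[idx]]
    # Depth test: visible only if the input view's depth at the reprojected pixel
    # matches the point's depth; a nearer input depth means the point is occluded.
    visible[idx] = np.abs(d_in - z[idx]) < tol * np.maximum(d_in, 1e-8)
    return visible.reshape(H, W), uv.reshape(H, W, 2)
```

With identical cameras and identical depth maps every pixel is visible. If the novel camera shifts sideways, the pixels it sees beyond the input camera's field of view, or behind a nearer surface in the input depth map, are flagged as occluded. The returned `uv` map is what a subsequent texture-warping step would sample from.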

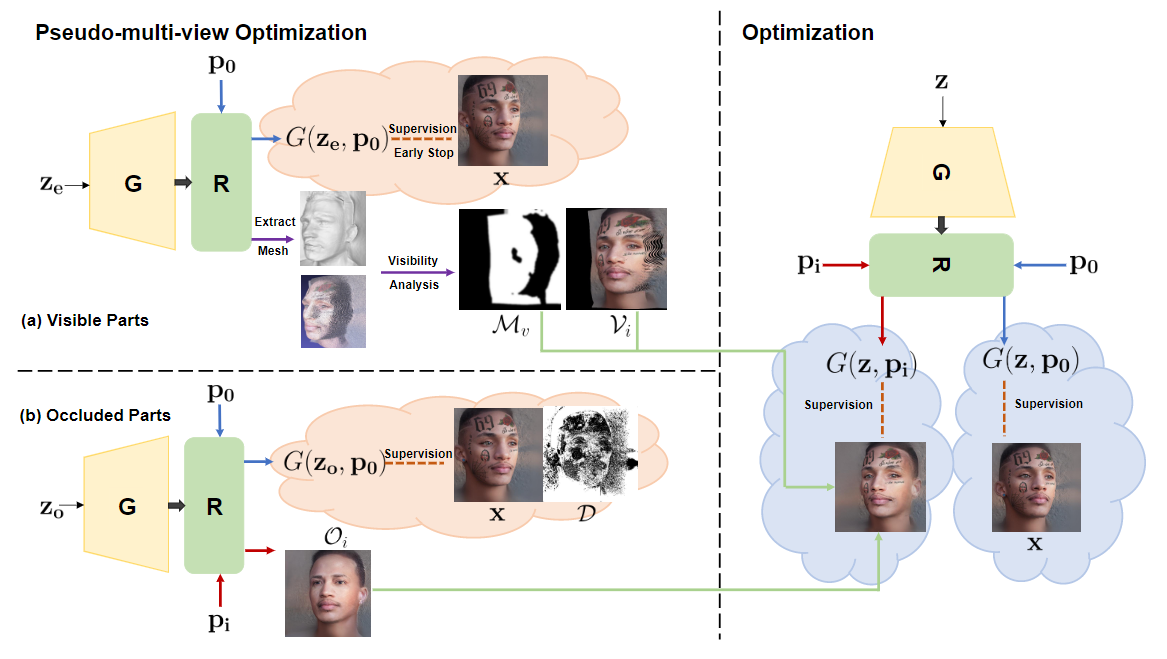

We divide the pseudo-multi-view estimation into two steps, depending on whether a region of the novel view is visible in the input image. Given a novel view, the synthesized image is expected to contain some parts that are also visible in the input image. Because only a single input view is provided, other parts of the novel view are occluded in the input image. For the visible parts, we directly warp the original textures from the input image. For the occluded parts, we rely on the pretrained generator G, which can synthesize diverse photo-realistic, 3D-consistent images, to inpaint the occluded areas.
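The two steps can be illustrated with a minimal NumPy sketch. It assumes that a boolean visibility mask for the novel view and a per-pixel map `uv_in_input` of (u, v) coordinates in the input image are already available (for example, from a depth-reprojection test), and that `generator_view` is the novel view rendered by the pretrained generator G from the current latent code. Visible pixels take bilinearly warped textures from the input image; occluded pixels fall back to the generator's rendering. The function names are hypothetical, and the hard binary blend is a simplification; a practical pipeline might soften the mask boundary.

```python
import numpy as np


def bilinear_sample(image, uv):
    """Sample an image (H, W, C) at float pixel coordinates uv (..., 2) given as (u, v)."""
    H, W = image.shape[:2]
    u = np.clip(uv[..., 0], 0, W - 1)
    v = np.clip(uv[..., 1], 0, H - 1)
    u0 = np.floor(u).astype(int)
    v0 = np.floor(v).astype(int)
    u1 = np.minimum(u0 + 1, W - 1)
    v1 = np.minimum(v0 + 1, H - 1)
    du = (u - u0)[..., None]                      # horizontal interpolation weight
    dv = (v - v0)[..., None]                      # vertical interpolation weight
    top = image[v0, u0] * (1 - du) + image[v0, u1] * du
    bottom = image[v1, u0] * (1 - du) + image[v1, u1] * du
    return top * (1 - dv) + bottom * dv


def pseudo_view(input_image, uv_in_input, visible, generator_view):
    """Compose a pseudo novel view: warped input textures where visible, G's rendering elsewhere."""
    warped = bilinear_sample(input_image, uv_in_input)   # step 1: warp visible textures
    m = visible[..., None].astype(input_image.dtype)
    return m * warped + (1 - m) * generator_view         # step 2: inpaint occluded areas
```

Keeping the warped pixels exactly where the input is visible preserves the original details, while the generator only has to fill regions the single input view never observed.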